WebAssembly and the Elusive Universal Binary

June 2020

Background

The normal process of distributing binaries:

source → Linux build → Linux users
source → Mac build → Mac users
source → Windows build → Windows users

Example: My Use Case

wasm-opt (part of Binaryen) shrinks WebAssembly (wasm) files by around 20%.

Linux, Mac, and Windows x86_64 builds are used by toolchains like Emscripten (C++) and wasm-pack (Rust).

But some users can't use those builds (e.g. on ARM, BSD, or unusual Linux setups), the build infrastructure takes work to maintain, tests sometimes fail only on one platform, etc. :(

A "Universal Binary" is the dream of a single executable that runs everywhere and at 100% speed.

source → single build → Linux users, Mac users, Windows users

Not necessarily a literal binary! We want a "Universal Build", really — the details don't matter if it's fast and portable!

Portability

We can distinguish two types:

CPU portability concerns pure computation, and lets you run your code no matter the CPU.

OS portability concerns APIs, and lets you print, access files, etc. no matter the operating system.

The Web has one of the best portability stories.

CPU or OS-specific bugs are very rare!

(browser-specific bugs are much more common...)

Anything non-portable is simply disallowed, and plugins are mostly a thing of the past.

Off the Web: Java, .NET, Python, Node.js, and other virtual machines (VMs) provide full CPU portability.

Some of their operations are OS-specific: less portable, but more powerful, which you sometimes want when running your own code on your own server.

If we have C, C++, Rust, or Go — the family of languages that compile to executable code — can we use a VM?

All of those can compile to WebAssembly, which solves CPU portability! Now, which VMs support it?

Off the Web there are various options:

Node.js APIs provide a useful set of OS operations on files (read, write) and processes (spawn, fork), etc.

// No special sandboxing model; as with Python etc.,

// we get a reasonably portable set of OS operations.

const fs = require("fs");

const data = fs.readFileSync("data.dat");

// Can provide imports to wasm that use these indirectly

// (just like on the Web).
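To make "provide imports to wasm" concrete, here is a minimal sketch that should run in Node.js as-is. The tiny module is hand-assembled for illustration and is not from the talk; the import name `env.log` and the export name `run` are assumptions. In a real program the import body could call `fs` or any other Node.js API.

```javascript
// Hand-assembled wasm module, equivalent to:
//   (module
//     (import "env" "log" (func $log (param i32)))
//     (func (export "run") (call $log (i32.const 42))))
const bytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic + version
  0x01, 0x08, 0x02, 0x60, 0x01, 0x7f, 0x00, 0x60, 0x00, 0x00, // types: (i32)->(), ()->()
  0x02, 0x0b, 0x01, 0x03, 0x65, 0x6e, 0x76, 0x03, 0x6c, 0x6f, 0x67, 0x00, 0x00, // import env.log
  0x03, 0x02, 0x01, 0x01, // one defined function, of type 1
  0x07, 0x07, 0x01, 0x03, 0x72, 0x75, 0x6e, 0x00, 0x01, // export "run" = function 1
  0x0a, 0x08, 0x01, 0x06, 0x00, 0x41, 0x2a, 0x10, 0x00, 0x0b, // body: i32.const 42; call 0
]);

const received = [];
const imports = {
  env: {
    // Host-side implementation of the import (illustrative only).
    log: (value) => received.push(value),
  },
};

const instance = new WebAssembly.Instance(new WebAssembly.Module(bytes), imports);
instance.exports.run();
console.log(received); // the value wasm passed to the JS import
```

The wasm code never touches the OS itself; everything it can do is decided by the functions placed in `imports`.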

WASM Runtimes: WASI APIs

The WebAssembly System Interface, meant for non-Web environments.

WASI is not just a bunch of familiar APIs brought to wasm! It is a new approach to writing an OS interface layer, a replacement for something like POSIX.

In particular WASI uses capability-based security and has stricter portability.
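As a rough illustration of the capability idea (not WASI's actual API), the sketch below hands code a handle for one directory and refuses any path that leaves it. `openDirCapability` and the `/tmp/sandbox` path are hypothetical names chosen for the example, and the check is purely lexical (it ignores symlinks).

```javascript
const path = require("path");

// Hypothetical helper, not part of WASI or Node.js: a handle that can only
// name files inside the directory it was created for.
function openDirCapability(root) {
  const base = path.resolve(root);
  return {
    resolve(relativePath) {
      const full = path.resolve(base, relativePath);
      const rel = path.relative(base, full);
      const escapes =
        rel === ".." || rel.startsWith(".." + path.sep) || path.isAbsolute(rel);
      if (escapes) {
        throw new Error("access outside the granted directory: " + relativePath);
      }
      return full;
    },
  };
}

const dir = openDirCapability("/tmp/sandbox");
console.log(dir.resolve("data/input.wasm")); // allowed: stays inside the grant
try {
  dir.resolve("../secrets.txt");
} catch (e) {
  console.log(e.message); // rejected: would leave the granted directory
}
```

The contrast with the Node.js model above is that code holding only `dir` has no way to name a file elsewhere, instead of being trusted not to.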

The Big Picture for APIs

WASI is supported on Node.js too, not just wasm VMs.

In the long term WASI will likely be the best option for many things, but it is still early.

The strict security & portability will limit what can be done, which as always is a tradeoff.

After that background, let's get back to our concrete example:

[Diagram: wasm-opt → (unlabeled stage) → OSes, CPUs]

Compiling to WASM VMs?

wasm-opt needs only basic file operations, which WASI supports.

But wasm-opt also needs C++ exceptions or setjmp (for an internal wasm interpreter that helps optimize), which WASI does not support yet (wasm exception handling will fix that eventually).

Compiling to wasm on Node.js?

Emscripten supports setjmp and C++ exceptions when building to wasm + JS, by calling out to JS:

↑ throw [js]

↑ bar() [wasm]

↑ try-catch [js]

↑ foo() [wasm]

↑ main() [wasm]

Works anywhere with wasm + JS, including on the Web and on Node.js!
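The call stack above can be reproduced in miniature. This is a sketch of the general mechanism only, not Emscripten's actual runtime code: the module is hand-assembled, and the names `invoke`, `raise`, `foo`, and `bar` are assumptions made for the example.

```javascript
// Hand-assembled wasm module, equivalent to:
//   (module
//     (import "env" "invoke" (func $invoke))
//     (import "env" "raise" (func $raise))
//     (func (export "foo") (call $invoke))
//     (func (export "bar") (call $raise)))
const bytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic + version
  0x01, 0x04, 0x01, 0x60, 0x00, 0x00, // type 0: ()->()
  0x02, 0x1a, 0x02, // two imports
  0x03, 0x65, 0x6e, 0x76, 0x06, 0x69, 0x6e, 0x76, 0x6f, 0x6b, 0x65, 0x00, 0x00, // env.invoke
  0x03, 0x65, 0x6e, 0x76, 0x05, 0x72, 0x61, 0x69, 0x73, 0x65, 0x00, 0x00, // env.raise
  0x03, 0x03, 0x02, 0x00, 0x00, // two defined functions of type 0
  0x07, 0x0d, 0x02, 0x03, 0x66, 0x6f, 0x6f, 0x00, 0x02, 0x03, 0x62, 0x61, 0x72, 0x00, 0x03, // exports
  0x0a, 0x0b, 0x02, 0x04, 0x00, 0x10, 0x00, 0x0b, 0x04, 0x00, 0x10, 0x01, 0x0b, // bodies
]);

const trace = [];
let instance;
const imports = {
  env: {
    // [js] try-catch frame sitting between the two wasm frames.
    invoke: () => {
      try {
        instance.exports.bar();
      } catch (e) {
        trace.push("caught: " + e.message);
      }
    },
    // [js] the throw itself; the JS exception unwinds through bar()'s wasm frame.
    raise: () => {
      trace.push("throwing");
      throw new Error("from bar");
    },
  },
};

instance = new WebAssembly.Instance(new WebAssembly.Module(bytes), imports);
instance.exports.foo(); // foo [wasm] -> invoke [js] -> bar [wasm] -> raise [js]
trace.push("foo returned normally");
console.log(trace);
```

The wasm code contains no exception-handling instructions at all; the unwinding and catching happen entirely in the JS frames.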

Very easy to compile wasm-opt with emcc:

$ emcmake cmake .

$ make -j8 wasm-opt

Defaults are mostly ok, except that Emscripten's normal output is sandboxed. To get direct local file access in Node.js, use -s NODERAWFS:

$ emcmake cmake . "-DCMAKE_EXE_LINKER_FLAGS=-s NODERAWFS"

That's it!

# runs almost like a normal executable

$ node wasm-opt.js input.wasm -O -o output.wasm

# output is as expected (note the size improvement)

$ ls -lh input.wasm output.wasm

-rw-r--r-- 23K input.wasm

-rw-r--r-- 18K output.wasm

Great: Full CPU and OS portability!

28% slower than a native build: not bad for a portable build.

Not great: Compilation causes a startup delay of about 1 second, even for wasm-opt --help :(

The real solution for startup is wasm code caching, which works on the Web, but not yet on Node.js.

Node.js 12 had an API for code caching (in Emscripten we added -s NODE_CODE_CACHING) but it is gone in Node.js 14+.

The story so far :(

- Can't yet do WASI since no setjmp support

- Can't yet do Node.js since startup is slower

Maybe we should wait until things improve?

wasm2c

Compiles wasm to C. Part of WABT, written by binji.

Full workflow:

original source → wasm → C → executable

Very easy to do!

# tell emscripten to use wasm2c

$ emcmake cmake . "-DCMAKE_EXE_LINKER_FLAGS=-s WASM2C"

$ make -j8

# build the C output

$ clang wasm-opt.wasm.c -O2 -lm -o wasm-opt

# runs like a normal executable!

$ ./wasm-opt input.wasm -O -o output.wasm

# same output as before

$ ls -lh input.wasm output.wasm

-rw-r--r-- 23K input.wasm

-rw-r--r-- 18K output.wasm

Wait, isn't all this a little silly?

We started with C++, compiled to wasm, then to C, which we still need to compile..?!

We've simplified what happens on the user's machine to the simplest possible compilation:

Dev machine: original source → wasm → C

User machine: C → executable

- There is a C compiler everywhere — source could be C++20, Rust nightly, etc.!

- Possibly complex build system on dev machine, but a single C file is shipped!

Startup is instantaneous, exactly like a normal executable!

Throughput is just 13% slower (half the overhead of the wasm from earlier) thanks to clang/gcc/etc.!

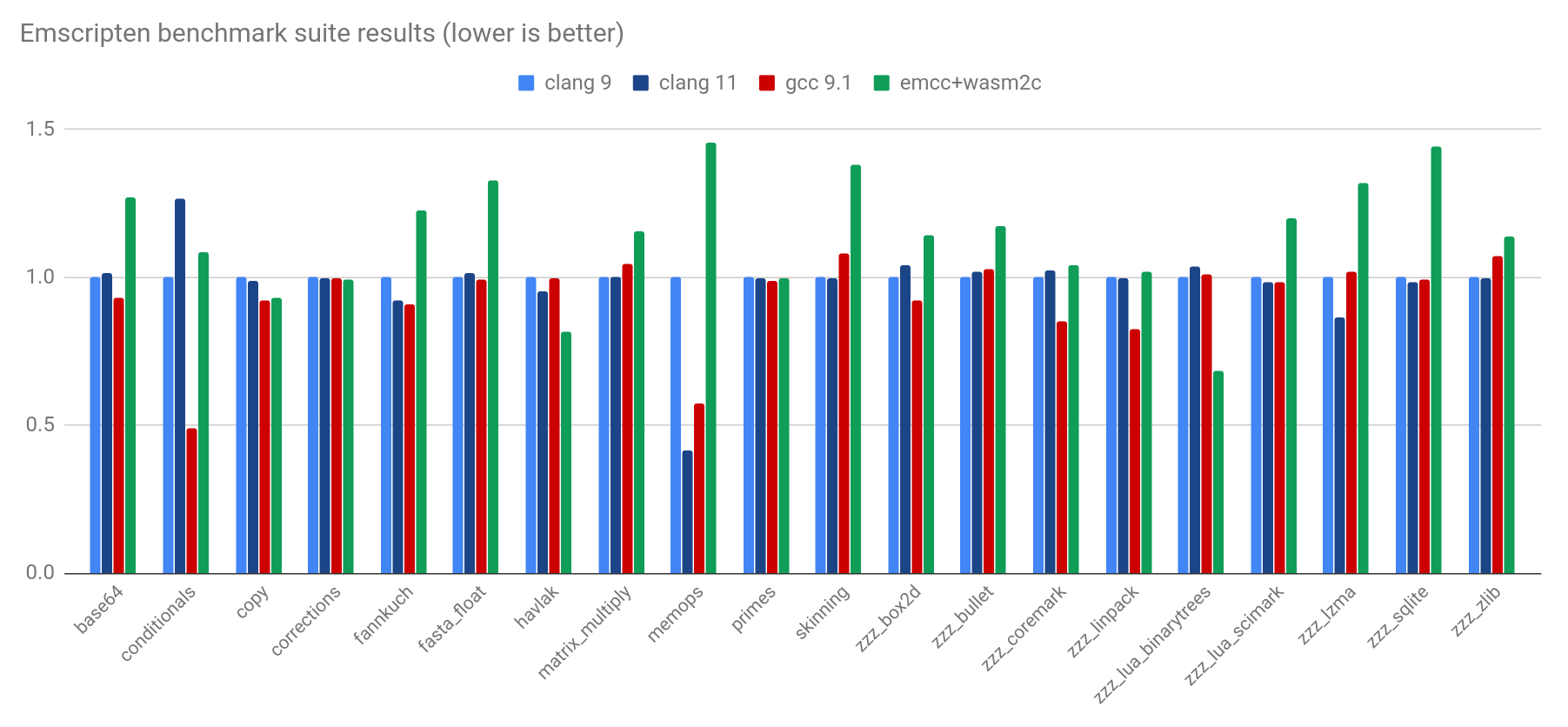

More Benchmarks

Just 14% overhead on average!

A surprising speed benefit

wasm2c is 30% faster on lua-binarytrees, 20% on havlak! How can that be?

Wasm is a 32-bit architecture (so far). So on a 64-bit host, it's an easy way to get x32-like benefits: save memory with half-sized pointers!
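A back-of-the-envelope calculation shows where the memory saving comes from. The node layout here (two child pointers plus a 32-bit payload, padded to pointer alignment) is an assumed, typical C layout for a binary tree and is not taken from the benchmarks; allocator overhead is ignored.

```javascript
// Bytes per tree node holding two child pointers and one 32-bit payload,
// rounded up to pointer alignment (assumed layout, for illustration).
function nodeBytes(pointerBytes) {
  const raw = 2 * pointerBytes + 4;
  return Math.ceil(raw / pointerBytes) * pointerBytes;
}

const wasm32 = nodeBytes(4); // 32-bit pointers, as in wasm
const native64 = nodeBytes(8); // 64-bit pointers, as on an x86_64 host
console.log({ wasm32, native64, ratio: wasm32 / native64 });
```

Under these assumptions a node takes 12 bytes with 32-bit pointers and 24 bytes with 64-bit pointers, so a pointer-heavy workload fits twice as many nodes per cache line.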

wasm2c: VM-less Wasm

100% as portable as wasm in a VM

(the C is portable C)

100% as sandboxed as wasm in a VM

(traps on out of bounds loads, etc.)

(for comparison, wasm2js behaves slightly differently from wasm in corner cases that would be too slow to emulate exactly in JS)
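The trapping behavior amounts to a bounds check before each memory access. The sketch below mirrors that idea in JavaScript; it is not the C that wasm2c generates, and `i32Load` is a hypothetical helper name.

```javascript
const PAGE_SIZE = 65536; // wasm linear memory is sized in 64 KiB pages

// Hypothetical stand-in for a checked 32-bit load from linear memory.
function i32Load(memory, address) {
  if (address < 0 || address + 4 > memory.byteLength) {
    throw new RangeError("trap: out of bounds memory access");
  }
  return new DataView(memory).getInt32(address, true); // wasm is little-endian
}

const memory = new ArrayBuffer(PAGE_SIZE);
new DataView(memory).setInt32(16, 1234, true);
console.log(i32Load(memory, 16)); // in bounds
try {
  i32Load(memory, PAGE_SIZE - 2); // would read 2 bytes past the end
} catch (e) {
  console.log(e.message);
}
```

Because every access goes through such a check, a bug in the compiled program can corrupt only its own linear memory, never the host process around it.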

APIs?

wasm2c itself is agnostic to APIs: wasm imports become calls to C functions you must provide.

wasm2c can be used with any wasm toolchain, if you make a C runtime with your imports.

In the Emscripten runtime for wasm2c we have implementations of various WASI APIs and also of e.g. setjmp, which is how we could run wasm-opt and all those benchmarks!

Current status of wasm2c

The C code builds with clang and gcc on all platforms, but we need help with MSVC etc. (we currently use e.g. __builtin_clz).

Single C file isn't fast to compile with -O2.

Emscripten wasm2c runtime supports almost everything, but still missing e.g. C++ exceptions.

All of this is open source of course - help is welcome!

Conclusion

[Diagram: source → (two unlabeled stages) → OSes, CPUs]

A "Universal C Build" works surprisingly well:

- Easy on dev machine: wasm toolchain + wasm2c

- Easy to build on user machine

- Single build for all CPUs and OSes

- Fast start up and throughput

Conclusion (2)

In the long term Node.js, wasm VMs, and WASI will fix the issues we saw, and VMs will generally be the best option!

Yet even then a VM-less approach may be simpler for some things (but no more time in this talk...)

Thank you!

Questions?